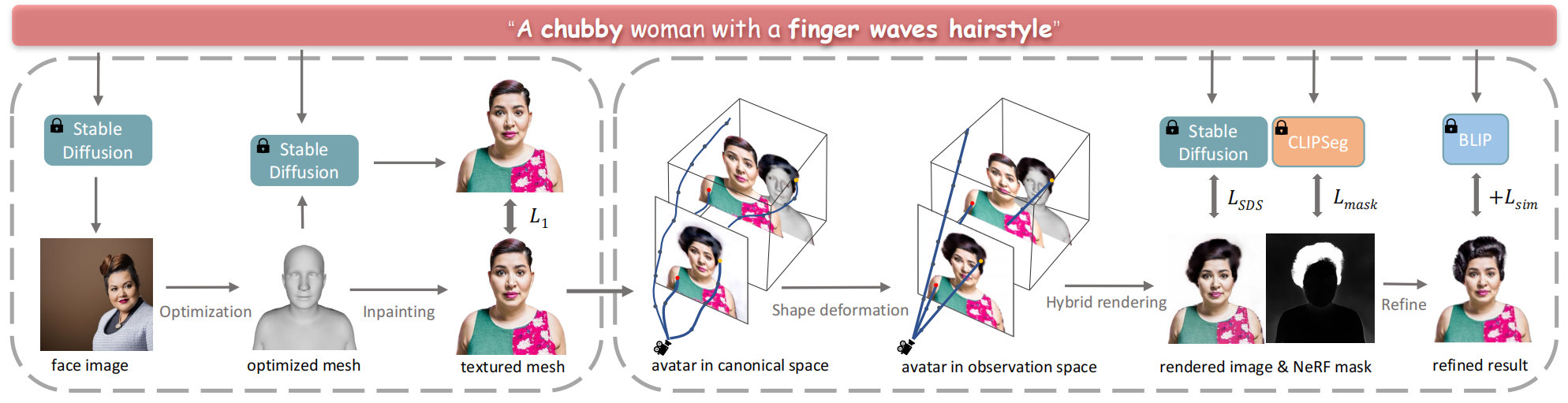

Given a text description, our method produces a compositional 3D avatar consisting of a mesh-based face and body and NeRF-based hair, clothing and other accessories.

Our goal is to create a realistic 3D facial avatar with hair and accessories using only a text description. While this challenge has attracted significant recent interest, existing methods lack realism, produce unrealistic shapes, or do not support editing, such as modifications to the hairstyle. We argue that existing methods are limited because they employ a monolithic modeling approach, using a single representation for the head, face, hair, and accessories. Our observation is that the hair and face, for example, have very different structural qualities that benefit from different representations. Building on this insight, we generate avatars with a compositional model, in which the head, face, and upper body are represented with traditional 3D meshes, and the hair, clothing, and accessories with neural radiance fields (NeRF). The model-based mesh representation provides a strong geometric prior for the face region, improving realism while enabling editing of the person’s appearance. By using NeRFs to represent the remaining components, our method is able to model and synthesize parts with complex geometry and appearance, such as curly hair and fluffy scarves. Our novel system synthesizes these high-quality compositional avatars from text descriptions. Specifically, we generate a face image from the text, fit a parametric shape model to it, and inpaint the texture using diffusion models. Conditioned on the generated face, we sequentially generate style components such as hair or clothing using Score Distillation Sampling (SDS) with guidance from CLIPSeg segmentations. However, this alone is not sufficient to produce avatars with a high degree of realism. Consequently, we introduce a hierarchical approach to refine the non-face regions using a BLIP-based loss combined with SDS.
The experimental results demonstrate that our method, Text-guided generation and Editing of Compositional Avatars (TECA), produces avatars that are more realistic than those of recent methods while being editable because of their compositional nature. For example, TECA enables the seamless transfer of compositional features like hairstyles, scarves, and other accessories between avatars. This capability supports applications such as virtual try-on.
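
To make the Score Distillation Sampling (SDS) step mentioned above more concrete, here is a minimal toy sketch in pure Python. It is not the TECA implementation: the "renderer" is the identity (the optimized parameters are the pixel values themselves), the function names `sds_gradient`, `make_toy_denoiser`, and `run_toy_sds` are hypothetical, the noise schedule and the weighting `1 - a_bar` are assumed choices, and the denoiser is a stand-in that pretends the diffusion model's preferred image is a fixed target. The CLIPSeg guidance and BLIP-based loss are not modeled.

```python
import math
import random


def sds_gradient(pixels, predict_noise, a_bar, rng):
    """SDS gradient w.r.t. the rendered pixels: weight * (eps_hat - eps)."""
    # Sample noise and form the noised render x_t = sqrt(a_bar) x + sqrt(1 - a_bar) eps.
    eps = [rng.gauss(0.0, 1.0) for _ in pixels]
    noisy = [
        math.sqrt(a_bar) * x + math.sqrt(1.0 - a_bar) * e
        for x, e in zip(pixels, eps)
    ]
    # Ask the (frozen) denoiser which noise it believes was added.
    eps_hat = predict_noise(noisy, a_bar)
    weight = 1.0 - a_bar  # assumed weighting; other choices are possible
    return [weight * (eh - e) for eh, e in zip(eps_hat, eps)]


def make_toy_denoiser(target):
    """Hypothetical stand-in for a text-conditioned diffusion model.

    It predicts the noise that would explain `noisy` if the clean image
    were exactly `target`.
    """
    def predict_noise(noisy, a_bar):
        return [
            (n - math.sqrt(a_bar) * tgt) / math.sqrt(1.0 - a_bar)
            for n, tgt in zip(noisy, target)
        ]
    return predict_noise


def run_toy_sds(steps=200, lr=0.5, seed=0):
    """Optimize 16 pixel values with SDS; return (start_error, end_error)."""
    rng = random.Random(seed)
    # Assumed schedule: cumulative alpha from 0.99 (little noise) to 0.01 (mostly noise).
    schedule = [0.99 - 0.98 * i / 99 for i in range(100)]
    target = [0.5] * 16
    pixels = [0.0] * 16
    denoiser = make_toy_denoiser(target)

    def mean_abs_error(values):
        return sum(abs(v - t) for v, t in zip(values, target)) / len(target)

    start_error = mean_abs_error(pixels)
    for _ in range(steps):
        a_bar = rng.choice(schedule)  # random diffusion timestep
        grad = sds_gradient(pixels, denoiser, a_bar, rng)
        pixels = [p - lr * g for p, g in zip(pixels, grad)]
    return start_error, mean_abs_error(pixels)
```

Calling `run_toy_sds()` returns the mean absolute error to the toy target before and after optimization. In a real system the gradient would additionally be back-propagated through a differentiable renderer into the NeRF parameters, and the denoiser would be a pretrained text-conditioned diffusion model.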

Virtual Try-on: Generated hair, clothing, and other accessories can be transferred directly to a target avatar for virtual try-on applications.
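
The following is a minimal sketch of why a compositional representation makes this kind of transfer straightforward. It is an illustration under stated assumptions, not the TECA code: the `Avatar` and `StyleComponent` classes, their fields, and the `transfer` function are hypothetical, NeRF weights and textures are represented by placeholder strings, and any alignment of a component to the target's head shape is ignored.

```python
from dataclasses import dataclass, field, replace
from typing import Dict, Iterable, Tuple


@dataclass(frozen=True)
class StyleComponent:
    """One NeRF-based part, e.g. hair or a scarf (hypothetical container)."""
    name: str
    nerf_weights: str  # placeholder handle standing in for NeRF parameters


@dataclass(frozen=True)
class Avatar:
    """Mesh-based face/body plus a set of separately stored NeRF components."""
    shape_params: Tuple[float, ...]  # parametric shape coefficients (assumed form)
    texture: str                     # placeholder handle for the face texture
    components: Dict[str, StyleComponent] = field(default_factory=dict)


def transfer(source: Avatar, target: Avatar, names: Iterable[str]) -> Avatar:
    """Return a copy of `target` wearing the named components from `source`."""
    merged = dict(target.components)  # copy so the original target is untouched
    for name in names:
        merged[name] = source.components[name]
    # The mesh (shape and texture) of the target is kept as-is.
    return replace(target, components=merged)
```

For example, `transfer(alice, bob, ["hair"])` would yield an avatar with Bob's face and body and Alice's hair, leaving both input avatars unchanged. A monolithic representation offers no such handle, because hair and face share a single set of parameters.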

Animation: Leveraging the SMPL-X model, we can easily control the avatar for animation applications.

This work was partially supported by the Max Planck ETH Center for Learning Systems.

MJB has received research gift funds from Adobe, Intel, Nvidia, Meta/Facebook, and Amazon. MJB has financial interests in Amazon, Datagen Technologies, and Meshcapade GmbH. While MJB is a consultant for Meshcapade, his research in this project was performed solely at, and funded solely by, the Max Planck Society.

@article{zhang2023teca,
  author = {Zhang, Hao and Feng, Yao and Kulits, Peter and Wen, Yandong and Thies, Justus and Black, Michael J.},
  title = {TECA: Text-Guided Generation and Editing of Compositional 3D Avatars},
  journal = {arXiv},
  year = {2023},
}